Purpose: This article illustrates how various Machine Learning algorithms apply to real-world business scenarios for Microsoft Dynamics

Scenario: In my previous articles I described an Enterprise IoT (Internet of Things) scenario as depicted in the diagram below

In this article I’ll explain, in very simple terms, how to apply Machine Learning to implement your own scenarios for Microsoft Dynamics. In my experiments I’ll use the Microsoft Azure Machine Learning cloud service. Please find more information about Microsoft Azure Machine Learning here: https://azure.microsoft.com/en-us/services/machine-learning/

Machine Learning is a scientific discipline that explores the construction and study of algorithms that can learn from data. Please find more information about Machine Learning here: https://en.wikipedia.org/wiki/Machine_learning

In particular, you can use Machine Learning algorithms to predict future trends, better structure your knowledge in a certain domain, and gain meaningful business insights

The basic principle is that you first teach the system by feeding it some data (in other words, the system learns from the data you provide) and then apply an appropriate mathematical algorithm for a particular task to get the result. With Microsoft Azure Machine Learning you don’t need to implement the underlying mathematics yourself (no coding); instead you use the Microsoft Azure Machine Learning Studio drag-and-drop design area to assemble your own experiments. Please note that it is important to have a reliable dataset and to know your data structure to achieve great outcomes with Machine Learning

For the sake of simplicity, we’ll divide Machine Learning algorithms into 2 broad categories

Supervised learning:

- Regression
- Classification

Unsupervised learning:

- Clustering
- Anomaly detection

In Supervised learning scenarios you typically know exactly what you are trying to predict, for example, numeric or non-numeric values. In Unsupervised learning scenarios you may lack a good enough understanding of the problem domain and know only roughly what you are looking for, so you rely on the system to provide hints that help you understand the problem domain better. Examples are grouping information in a logical way or detecting deviations from normal system behavior

Currently Microsoft Azure Machine Learning delivers a multitude of algorithms in 4 main categories

- Regression
- Classification
- Clustering
- Anomaly detection

Regression: In statistics regression analysis is a statistical process for estimating the relationships among variables. Regression analysis is widely used for prediction and forecasting

Example: Machine Log (Predicting numeric values)

Classification: In statistics classification is the problem of identifying to which of a set of categories a new observation belongs on the basis of a training set of data containing observations whose category membership is known

Example: Machine Log (Predicting non-numeric values)

Clustering: Clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense or another) to each other than to those in other groups (clusters)

Example: Machine Log (Grouping information)

Anomaly detection: In data mining, anomaly detection (or outlier detection) is the identification of items, events or observations which do not conform to an expected pattern or other items in a dataset

Example: Operator Log (Erroneous data entry)

Now that we know the general purpose of each type of algorithm, we’ll review them one by one with examples

First, in Microsoft Azure Machine Learning Studio I’ll create 4 experiments, one for each of the 4 types of algorithms, as shown below

Experiments

To keep it simple, the data I’ll be using is in the form of Excel spreadsheets, which I’ll upload as datasets as shown below

Datasets

Creating a new dataset is simple using the dialog shown below

New dataset

Creating a new experiment is also simple

New experiment

The first Machine Learning algorithm we’ll review is Regression

Regression

In this experiment we’ll consider a make-to-order Manufacturing environment and a Machine Log dataset generated by equipment/machinery on the shop floor. The actual data will represent what we produced over a period of time. The goal will be to predict what will be produced in the future based on historical production data representing customer demand. In other words, we’ll be predicting numeric values representing future volumes of production

Excel spreadsheet with the data for Regression experiment looks like below

Dataset

I highlighted in green the values I’d like to predict based on historical data. Qty for the last 5 rows is not known yet, but I do know what I have already produced. Please note that my dataset is very small, yet descriptive enough for the explanation. For simplicity I’m also assuming that customer demand is repeatable; otherwise, to get more accurate results, I’d have to use a larger dataset and/or a different algorithm

The model I built in Microsoft Azure Machine Learning Studio for Regression experiment looks like below

Model

Here’s the list of building blocks with explanations

Element

|

Purpose

|

Dataset

|

Excel spreadsheet with historical production data

|

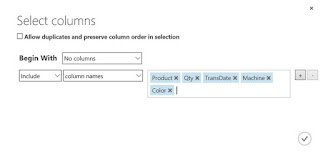

Project columns

|

Please note that I specified meaningful variables (Product, Qty, TransDate, Machine, etc.) which in my opinion correlate, by other words interdependent, for training the system

|

Split data

|

Please note that I use 84% of the data to train the system and the rest of 16% of the data (the last 5 rows highlighted with green) for prediction

|

Linear Regression

|

Please note that I’m using a simple Linear Regression algorithm to identify a trend and predict the future data (Qty produced)

|

Train model

|

Please note that I explicitly specify what data I’m trying to predict (Qty produced) during training of the model

|

Score model

|

After training the model I’ll be scoring the model to come up with predictions (Qty produced)

|

Evaluate model

|

Once model has been scored I can also check the accuracy of my prediction in case I knew actuals. Typically, evaluation is done after prediction as time passes and we know the actuals

|
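For readers who want to see what the Split data, Linear Regression, Train model and Score model blocks do conceptually, here is a minimal sketch in plain Python. It is not what the Studio runs internally: it assumes a single numeric feature (a hypothetical period index) and 31 made-up rows, chosen only so that an 84% split leaves the last 5 rows for scoring.

```python
def split_rows(rows, train_fraction):
    """Keep the first part for training and the rest for scoring, preserving order."""
    cut = round(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


def fit_line(points):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def score(model, xs):
    """Apply the fitted line to new x values (the 'Scored labels')."""
    intercept, slope = model
    return [intercept + slope * x for x in xs]


# Hypothetical data: (period index, Qty produced), a perfectly linear trend.
history = [(i, 10.0 + 2.0 * i) for i in range(1, 32)]

train, held_out = split_rows(history, 0.84)
model = fit_line(train)
scored_labels = score(model, [x for x, _ in held_out])
print(len(train), len(held_out), scored_labels)
```

With real shop floor data the trend will not be perfectly linear, so the scored values would only approximate the actuals.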

Once I run the model I can then visualize the results

Visualize

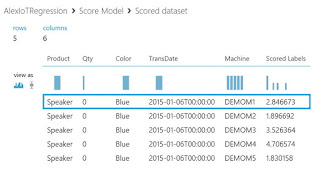

The result will be presented in Scored labels column as shown below

Result

You can also review evaluation results as shown below

Evaluation results

Now that we have both historical and predicted data, we can visualize it using Business Intelligence tools and services like Microsoft Excel Power View and Microsoft Power BI

Business Intelligence

The next algorithm we’ll review is Classification

Classification

In this experiment we’ll consider a make-to-order Manufacturing environment and a Machine Log dataset generated by equipment/machinery on the shop floor, as in the previous experiment. This time I’ll add one more piece of information: the priority of an order (Low, Medium, High). The actual data will represent what we produced over a period of time and also how we prioritized our work in the past. The goal will be to predict which production orders are likely to be prioritized in the future, based on historical production data representing customer demand and past priorities. In other words, we’ll be predicting non-numeric values representing priorities for future production orders from the list {Low, Medium, High}

Excel spreadsheet with the data for Classification experiment looks like below

Dataset

I highlighted in green the values I’d like to predict based on historical data. Priority for the last 5 rows is not known yet (by default it is set to Low for now), but I do know how we prioritized production orders in the past. Please note that my dataset is very small, yet descriptive enough for the explanation. For simplicity I’m also assuming that customer demand is repeatable and that some customers have higher priority than others; otherwise, to get more accurate results, I’d have to use a larger dataset and/or a different algorithm

The model I built in Microsoft Azure Machine Learning Studio for Classification experiment looks like below

Model

Here’s the list of building blocks with explanations

| Element | Purpose |
| --- | --- |
| Dataset | Excel spreadsheet with historical production data |
| Project columns | I specified meaningful variables (Product, Qty, TransDate, Machine, Priority, etc.) which in my opinion correlate, in other words are interdependent, for training the system |
| Split data | I use 84% of the data to train the system and the remaining 16% (the last 5 rows highlighted in green) for prediction |
| Multiclass decision forest | I’m using a Multiclass decision forest algorithm to identify a trend and predict the future data (Production order priority) |
| Train model | I explicitly specify what data I’m trying to predict (Production order priority) during training of the model |
| Score model | After training the model I score it to come up with predictions (Production order priorities) |
| Evaluate model | Once the model has been scored I can also check the accuracy of my predictions if I know the actuals. Typically, evaluation is done after prediction, as time passes and the actuals become known |

Once I run the model I can then visualize the results

Visualize

The result will be presented in Scored labels column as shown below

Result

The interesting thing here is that the system gives me not only the predicted Priorities but also how probable each Priority class is for a given row; please notice the Scored probabilities for Class “Low”, “Medium” and “High”, with values in the range [0 – 1]
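To make the relationship between scored probabilities and the scored label concrete, here is a small sketch in plain Python. It assumes a simplified picture of a decision forest in which each tree casts one vote and the class probability is the share of votes; the vote list is made up, and the Studio module’s internal aggregation may differ.

```python
from collections import Counter

CLASSES = ["Low", "Medium", "High"]


def vote_probabilities(tree_votes, classes=CLASSES):
    """Turn per-tree votes into a probability per class (share of votes)."""
    counts = Counter(tree_votes)
    return {c: counts[c] / len(tree_votes) for c in classes}


def scored_label(scored_probabilities):
    """Pick the class with the highest scored probability."""
    return max(scored_probabilities, key=scored_probabilities.get)


# Hypothetical votes of 8 trees for one production order.
votes = ["High", "High", "Medium", "High", "Low", "High", "Medium", "High"]
probabilities = vote_probabilities(votes)
label = scored_label(probabilities)
print(probabilities, label)
```

The probabilities always sum to 1 across the classes, which is why a confident prediction for one class implies low values for the others.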

Now that we have both historical and predicted data, we can visualize it using Business Intelligence tools and services like Microsoft Excel Power View and Microsoft Power BI

Business Intelligence

The next algorithm in line is Clustering

Clustering

In this experiment we’ll consider a Manufacturing environment and a Machine Log dataset generated by equipment/machinery on the shop floor, as in the previous experiment. This time the information I have describes OEE (Overall Equipment Effectiveness) data: the number of successful runs, failures, power outages and maintenance requests per machine. The goal will be to group machines into 2 groups: machines performing well and machines potentially in need of maintenance. In other words, we’ll implement a simple predictive maintenance model

Excel spreadsheet with the data for Clustering experiment looks like below

Dataset

To simplify the data representation, I will aggregate the values by using Microsoft Excel Power Pivot

I highlighted in green the number of successful runs per machine, and in red the number of failed runs per machine. The challenge is that by just looking at this data it is hard to say which machines are performing well and which are not, especially with a huge dataset. What I do know is that I want 2 groups (2 clusters) of machines as the result: “Well performing” machines and “Non-well performing” machines. The trick is in which meaningful variables I use for this experiment, because they define what clusters I get as the result

The model I built in Microsoft Azure Machine Learning Studio for Clustering experiment looks like below

Model

Here’s the list of building blocks with explanations

| Element | Purpose |
| --- | --- |
| Dataset | Excel spreadsheet with historical OEE data |
| Project columns | I specified meaningful variables (Machine, Success, Failure) which in my opinion correlate, in other words are interdependent, for training the system. What it really means is that I expect the Success-Failure ratio per machine to greatly help me group machines into “Well performing” machines and “Non-well performing” machines |
| K-Means Clustering | I’m using a K-Means Clustering algorithm to group the data into 2 clusters (Number of centroids = 2) according to the chosen meaningful variables |
| Train Clustering model | I explicitly specify what data will participate in the Clustering process (Machine, Success, Failure) during training of the model |
| Assign to Clusters | The system will assign the data to groups (clusters) based on the meaningful variables (Machine, Success, Failure) |
| Resulting dataset | Once the result is obtained I’ll present it in the form of a separate dataset |

After I run Clustering experiment I’ll save the result as a separate dataset as shown below

Save as dataset

Save output as a new dataset

Now I can visualize this newly created dataset as shown below

Visualize

The result will be presented in Assignments column as shown below

Result

Please note that the system also reports how far each result lies from the center of its group (Distance to cluster center), which indicates how tightly the results fit their clusters. If your results are highly dispersed, you may want to consider introducing additional cluster(s), if this makes sense in the context of the business task

Now if we visualize the obtained results in Excel, the data makes perfect sense. Machines grouped into Cluster 0 are “Well performing” machines with a good Success-Failure ratio (X = (Success/Failure) > 1), and machines grouped into Cluster 1 are “Non-well performing” machines with a bad Success-Failure ratio (X = (Success/Failure) < 1)
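The grouping described above can be reproduced on a toy scale. The sketch below is a plain k-means in Python with 2 centroids, run on made-up (Success, Failure) counts for five hypothetical machines; the naive initialization from the first two points is my simplification and not necessarily how the Studio module initializes its centroids.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means; starts from the first k points (a naive, deterministic choice)."""
    centroids = [tuple(float(v) for v in points[i]) for i in range(k)]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point goes to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return centroids, assignments


# Hypothetical (Success, Failure) counts per machine.
machines = {"M1": (90, 10), "M2": (20, 80), "M3": (85, 15), "M4": (30, 70), "M5": (95, 5)}
points = list(machines.values())
centroids, assignments = kmeans(points, k=2)

for (name, (success, failure)), cluster in zip(machines.items(), assignments):
    distance = math.dist((success, failure), centroids[cluster])
    print(name, "cluster", cluster, "ratio", round(success / failure, 2),
          "distance to center", round(distance, 2))
```

In this made-up data every machine in cluster 0 has a Success/Failure ratio above 1 and every machine in cluster 1 has a ratio below 1, mirroring the interpretation given in the article.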

Performance analysis

This provides a very meaningful insight into shop floor operations because now I know which machines are likely to require maintenance, and thus I can avoid costly machinery breakdowns

Please note that while you are designing your experiment the system will assist you with warnings if you are doing something wrong. For example, this is what one of the warnings may look like if you forget to assign certain variables to a shape

Logical exception

The last algorithm under consideration today is Anomaly detection

Anomaly detection

In this experiment we’ll consider a Manufacturing environment and an Operator Log dataset produced during shop floor operations. The dataset looks similar to the Machine Log dataset used for the Regression and Classification experiments. What I’m looking for in this dataset are Operator errors, for example, registering anomalously large or small quantities of products produced. Please note that I highlighted in red one of the Operator errors (Qty = -9999) which I’m going to catch with the help of a Machine Learning Anomaly detection algorithm

Excel spreadsheet with the data for Anomaly detection experiment looks like below

Dataset

The model I built in Microsoft Azure Machine Learning Studio for Anomaly detection experiment looks like below

Model

Here’s the list of building blocks with explanations

| Element | Purpose |
| --- | --- |
| Dataset | Excel spreadsheet with historical operator data |
| Project columns | I specified meaningful variables (Customer, Product, Qty) which in my opinion correlate, in other words are interdependent, for training the system |
| Split data | I use 84% of the data to train the system and the remaining 16% (the last 5 rows highlighted in green) for analysis |
| One-class support vector machine | I’m using a One-class support vector machine algorithm to analyze the data for anomalies |
| Train anomaly detection model | The model will be trained on the historical data |
| Score model | After training the model I score it to surface any anomalies detected |

Once I run the model I can then visualize the results

Visualize

The result will be presented in Scored labels column as shown below

Result

Please note that Qty = -9999 anomaly was detected as Scored labels column value for that row is 1. Also the system provides a relative probability for this to be an anomaly in Scored probability column
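As a rough illustration of how a value like Qty = -9999 stands out, here is a sketch in plain Python. It deliberately swaps in a much simpler technique than the One-class support vector machine used in the experiment: a modified z-score based on the median absolute deviation. The quantities and the 3.5 cutoff (a commonly used rule of thumb) are my assumptions.

```python
import statistics


def flag_anomalies(values, threshold=3.5):
    """Return 1 for values whose modified z-score exceeds the threshold, else 0."""
    median = statistics.median(values)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return [0 for _ in values]
    return [1 if 0.6745 * abs(v - median) / mad > threshold else 0 for v in values]


# Hypothetical operator-entered quantities, including one erroneous entry.
quantities = [10, 12, 11, 9, -9999, 10, 13]
labels = flag_anomalies(quantities)
print(list(zip(quantities, labels)))
```

Unlike this one-column rule, the One-class support vector machine in the experiment learns from several columns at once (Customer, Product, Qty), so it can catch combinations that look normal column by column.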

Please note that while you are designing your experiment the system will assist you with warnings if you are doing something wrong. For example, this is what one of the warnings may look like if you try to assign a variable of the wrong type to a shape

Logical exception

We have now reviewed 4 categories of Machine Learning algorithms

The next step is to think about how to consume the Microsoft Azure Machine Learning service from the cloud in practice. Luckily, it is very easy to convert a Machine Learning experiment into a Web Service as shown below

Web Service (Regression)

A brand-new experiment will be created (Predictive Web Service)

Experiments

Web Services

You can switch to Web Service experiment mode to see Web Service Input and Web Service Output ports

Experiment

Web Service Input Port

Web Service Output Port

On the dashboard you can find associated technical details about how to invoke Web Service as well as try it out (Test)

Dashboard

REQUEST/RESPONSE

Request URI (POST):

Sample request will look like below

Sample request

    {
      "Inputs": {
        "input1": {
          "ColumnNames": ["Order", "Product", "Qty", "Color", "Size", "Batch", "Serial", "TransDate", "Machine"],
          "Values": [
            ["value", "value", "0", "value", "value", "value", "value", "", "value"],
            ["value", "value", "0", "value", "value", "value", "value", "", "value"]
          ]
        }
      },
      "GlobalParameters": {}
    }

Please note that all the data elements we worked with are available as part of the message format
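If you assemble the request body yourself rather than copying the sample, a small helper keeps the column order consistent. The sketch below is plain Python with no network call; the helper name and the convention of filling missing columns with empty strings are my assumptions, while the column names and overall shape follow the sample request.

```python
import json

COLUMN_NAMES = ["Order", "Product", "Qty", "Color", "Size",
                "Batch", "Serial", "TransDate", "Machine"]


def build_score_request(rows):
    """Build the request body; every value is sent as a string, in column order."""
    values = [[str(row.get(name, "")) for name in COLUMN_NAMES] for row in rows]
    return {
        "Inputs": {"input1": {"ColumnNames": list(COLUMN_NAMES), "Values": values}},
        "GlobalParameters": {},
    }


# Hypothetical row: columns that are not supplied are sent as empty strings.
payload = build_score_request(
    [{"Product": "Speaker", "Qty": 0, "Color": "Blue", "Machine": "DEMOM1"}]
)
body = json.dumps(payload)
print(body)
```

The resulting string is what would be posted to the Request URI together with the API key of the web service.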

Sample response looks like below

Sample response

    {
      "Results": {
        "output1": {
          "type": "DataTable",
          "value": {
            "ColumnNames": ["Product", "Qty", "Color", "TransDate", "Machine", "Scored Labels"],
            "ColumnTypes": ["String", "Numeric", "String", "Object", "String", "Numeric"],
            "Values": [
              ["value", "0", "value", "", "value", "0"],
              ["value", "0", "value", "", "value", "0"]
            ]
          }
        }
      }
    }

We will definitely be interested in working with the result represented in Scored labels column

When a Machine Learning experiment is consumed as a Web Service, you send Web Service requests and receive Web Service responses as explained above

Below is sample app code showing how to consume a Machine Learning experiment (as a service) in .NET

Sample code

    // This code requires the NuGet package Microsoft.AspNet.WebApi.Client to be installed.
    // Instructions for doing this in Visual Studio:
    // Tools -> NuGet Package Manager -> Package Manager Console
    // Install-Package Microsoft.AspNet.WebApi.Client

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Net.Http;
    using System.Net.Http.Formatting;
    using System.Net.Http.Headers;
    using System.Text;
    using System.Threading.Tasks;

    namespace CallRequestResponseService
    {
        public class StringTable
        {
            public string[] ColumnNames { get; set; }
            public string[,] Values { get; set; }
        }

        class Program
        {
            static void Main(string[] args)
            {
                InvokeRequestResponseService().Wait();
            }

            static async Task InvokeRequestResponseService()
            {
                using (var client = new HttpClient())
                {
                    var scoreRequest = new
                    {
                        Inputs = new Dictionary<string, StringTable> () {
                            {
                                "input1",
                                new StringTable()
                                {
                                    ColumnNames = new string[] {"Order", "Product", "Qty", "Color", "Size", "Batch", "Serial", "TransDate", "Machine"},
                                    Values = new string[,] { { "value", "value", "0", "value", "value", "value", "value", "", "value" }, { "value", "value", "0", "value", "value", "value", "value", "", "value" }, }
                                }
                            },
                        },
                        GlobalParameters = new Dictionary<string, string>() {
                        }
                    };

                    const string apiKey = "abc123"; // Replace this with the API key for the web service
                    client.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue( "Bearer", apiKey);
                    client.BaseAddress = new Uri("https://ussouthcentral.services.azureml.net/workspaces/942addd6f90b4b4e9c27990c45a5dd6a/services/526fc0cacbf94379a06e2be99497092f/execute?api-version=2.0&details=true");

                    // WARNING: The 'await' statement below can result in a deadlock if you are calling this code from the UI thread of an ASP.Net application.
                    // One way to address this would be to call ConfigureAwait(false) so that the execution does not attempt to resume on the original context.
                    // For instance, replace code such as:
                    // result = await DoSomeTask()
                    // with the following:
                    // result = await DoSomeTask().ConfigureAwait(false)

                    HttpResponseMessage response = await client.PostAsJsonAsync("", scoreRequest);

                    if (response.IsSuccessStatusCode)
                    {
                        string result = await response.Content.ReadAsStringAsync();
                        Console.WriteLine("Result: {0}", result);
                    }
                    else
                    {
                        Console.WriteLine(string.Format("The request failed with status code: {0}", response.StatusCode));

                        // Print the headers - they include the request ID and the timestamp, which are useful for debugging the failure
                        Console.WriteLine(response.Headers.ToString());

                        string responseContent = await response.Content.ReadAsStringAsync();
                        Console.WriteLine(responseContent);
                    }
                }
            }
        }
    }

Let’s quickly test how it works. All we need is to enter the input data

Test

And review the outcome

Test results

Test results (text)

'AlexIoTRegression [Predictive Exp.]' test returned ["Speaker","0","Blue","1/6/2015 12:00:00 AM","DEMOM1","2.84667311803141"]...


Result: {"Results":{"output1":{"type":"table","value":{"ColumnNames":["Product","Qty","Color","TransDate","Machine","Scored Labels"],"ColumnTypes":["String","Int32","String","DateTime","String","Double"],"Values":[["Speaker","0","Blue","1/6/2015 12:00:00 AM","DEMOM1","2.84667311803141"]]}}}}


Please note that Scored labels column will contain the result I’m looking for which also corresponds to the result I obtained manually earlier

Test results
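On the consuming side, the value of interest has to be located by column name in the returned table. The following plain-Python sketch parses the response text shown above and extracts the Scored Labels column; the function name is hypothetical, and it assumes the response has the shape of that sample.

```python
import json


def scored_labels(response_text, output_name="output1"):
    """Extract the 'Scored Labels' column from a response as floats."""
    table = json.loads(response_text)["Results"][output_name]["value"]
    index = table["ColumnNames"].index("Scored Labels")
    return [float(row[index]) for row in table["Values"]]


# Response text copied from the test result above.
sample = (
    '{"Results":{"output1":{"type":"table","value":{"ColumnNames":'
    '["Product","Qty","Color","TransDate","Machine","Scored Labels"],'
    '"ColumnTypes":["String","Int32","String","DateTime","String","Double"],'
    '"Values":[["Speaker","0","Blue","1/6/2015 12:00:00 AM","DEMOM1",'
    '"2.84667311803141"]]}}}}'
)
labels = scored_labels(sample)
print(labels)
```

Looking the column up by name rather than by position keeps the code working if the projected columns of the experiment change.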

Now we know how to use the Microsoft Azure Machine Learning service and how to develop apps that use it as a service. But we don’t need to go far to find examples in the applications you’ve already been using. One example is the Demand Forecasting functionality in Microsoft Dynamics AX, which is essentially an implementation of Machine Learning in practice

Manufacturing example: Demand Forecasting in Microsoft Dynamics AX

The general idea is that using historical data in Microsoft Dynamics AX you may predict the future customer demand, put the results of forecasting into Excel for ease of manipulation and adjustments, and then reintroduce the final result of forecasting as sales forecast back to Microsoft Dynamics AX to be further used during planning (MRP)

This is what the Forecast algorithm default parameters look like in Microsoft Dynamics AX

Forecast algorithm default parameters

Please note that there are 3 auto-regression based Microsoft Time Series algorithms available {ARTXP, ARIMA, MIXED} for Demand Forecasting in Microsoft Dynamics AX

In essence, the Machine Learning algorithm schema looks like the following (right-hand side)

Machine Learning algorithm schema

First you train the model on the data, then you score the model to come up with predictions, and finally you can evaluate the model for accuracy against actuals (when they are available for comparison)
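The evaluation step boils down to comparing predictions with actuals once the actuals are known. Here is a minimal sketch in plain Python, assuming mean absolute error and root mean squared error as the accuracy measures; the numbers are made up, and the article does not state which measures a particular product reports.

```python
import math


def evaluate(predictions, actuals):
    """Compare predictions with actuals using two common error measures."""
    errors = [p - a for p, a in zip(predictions, actuals)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {"mean_absolute_error": mae, "root_mean_squared_error": rmse}


# Hypothetical predictions and the actuals that became known later.
metrics = evaluate(predictions=[3.0, 5.0, 8.0], actuals=[2.0, 5.0, 10.0])
print(metrics)
```

Root mean squared error penalizes large misses more heavily than mean absolute error, so reporting both gives a fuller picture of forecast quality.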

If we were to translate the canonical Machine Learning algorithm schema into Demand Forecasting terms in Microsoft Dynamics AX, it would look like the left-hand side of the diagram above. We train and score the model based on historical transactions during the Generate statistical baseline forecast step; an Excel file with the resulting forecast is then generated and can be adjusted as necessary. We then continue to transact in the system, and after some time we can evaluate the model for accuracy during the Calculate demand forecast accuracy step

Let’s come back from practice to theory again to better cement our knowledge of the subject

Microsoft Time Series algorithms

Microsoft Time Series algorithms are regression algorithms that are well optimized for the forecasting of continuous values, such as product sales, over time

In statistics, regression analysis is a process for estimating the relationships among variables. At this point you can reflect on my earlier explanations about usage of meaningful variables in Machine Learning experiments

Historical vs Predicted

There’re certain prerequisites for Time Series algorithms to be used. Here’s what is minimally required: a single key time column (DateKey), a predictable column (TransactionQty), an optional series key column. Not surprisingly you can find all these data in Excel spreadsheet used for Demand Forecasting
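To show what these prerequisites mean for the shape of the data, here is a small plain-Python sketch that arranges rows into one time-ordered series per series key. DateKey and TransactionQty come from the text above; the series key name ItemKey, the fallback key "all" and the sample rows are hypothetical.

```python
from collections import defaultdict
from datetime import date


def to_series(rows, series_key="ItemKey"):
    """Group rows by the optional series key and order each series by DateKey."""
    series = defaultdict(list)
    for row in rows:
        # Rows without a series key all fall into one default series.
        key = row.get(series_key, "all")
        series[key].append((row["DateKey"], row["TransactionQty"]))
    return {key: sorted(points) for key, points in series.items()}


# Hypothetical rows, deliberately out of order.
rows = [
    {"DateKey": date(2015, 2, 1), "TransactionQty": 7, "ItemKey": "A"},
    {"DateKey": date(2015, 1, 1), "TransactionQty": 5, "ItemKey": "A"},
    {"DateKey": date(2015, 1, 1), "TransactionQty": 3, "ItemKey": "B"},
]
series = to_series(rows)
print(series)
```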

Excel

Please find more information about Microsoft Time Series algorithms here: https://msdn.microsoft.com/en-us/library/ms174923.aspx

To see how Microsoft Time Series algorithms work, I’ll provide a quick example. Using the standard Demo VM for Microsoft Dynamics AX, I ran 3 Demand Forecasting experiments using different algorithms {ARTXP, ARIMA, MIXED} on the same dataset (LProducts item allocation key in USMF company) and obtained slightly different results. Below is the analysis of the results that I did in Excel

Excel analysis

Please note that in practice the ARTXP algorithm is better suited for short-term prediction: it is more sensitive to deviations in demand, so in the picture above it is more reactive (Red line). ARTXP may be over-reactive, though, if used (or misused) for long-term prediction. The ARIMA algorithm is better suited for long-term prediction: it is more resistant to deviations in demand, so in the picture above it is represented by a straighter line (Blue line). ARIMA may be too resistant, though, if used (or misused) for short-term prediction. The MIXED algorithm combines the best of both worlds and typically adapts well to either short-term or long-term prediction as necessary. In my experiment MIXED is closer to ARIMA based on the results (Green line)

It is very important to have a reliable dataset to train the system on. In practice the dataset may not be very accurate, or it may contain outliers which may skew the results of predictions. Getting back to Microsoft Dynamics AX Demand Forecasting, outliers may be caused by different kinds of artificial, non-representative demand, for example, retail promotions boosting sales. For the best prediction results it would be ideal to get rid of outliers and keep only natural, representative demand for prediction. For this purpose, you may actually want to combine a Regression algorithm for demand forecasting with an Anomaly detection algorithm for outlier detection. This is how Machine Learning algorithms may work in concert to deliver the best results.

In Microsoft Dynamics AX you may remove outliers based on predefined rules. For example, you may introduce a rule (query) to remove all high volume single transaction demand (based on quantity threshold) from consideration

Outlier removal

Outlier removal query

Please note that this query is configurable, thus you may introduce ranges per product, transaction type, transaction status, quantity, etc.
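The effect of such a rule can be sketched in a few lines of plain Python. This is only an illustration of threshold-based filtering, not the Dynamics AX query itself; the field names (item, type, qty), the threshold and the sample transactions are all hypothetical.

```python
def remove_outliers(transactions, quantity_threshold, transaction_types=None):
    """Drop single transactions above the quantity threshold.

    If transaction_types is given, the rule applies only to those types.
    """
    kept = []
    for t in transactions:
        in_scope = transaction_types is None or t["type"] in transaction_types
        if in_scope and t["qty"] > quantity_threshold:
            continue  # treated as non-representative demand
        kept.append(t)
    return kept


# Hypothetical historical demand transactions.
transactions = [
    {"item": "A", "type": "Sales", "qty": 5},
    {"item": "A", "type": "Sales", "qty": 500},
    {"item": "B", "type": "Transfer", "qty": 900},
]
cleaned = remove_outliers(transactions, quantity_threshold=100, transaction_types={"Sales"})
print(cleaned)
```

Restricting the rule to certain transaction types corresponds to adding a range on transaction type in the configurable query.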

Another great example is product recommendations for cross-sell or up-sell scenarios in retail. Please note that there are numerous Machine Learning models delivered as a service in the Microsoft Azure cloud through Microsoft Azure Marketplace: http://datamarket.azure.com

Retail example: Product recommendations engine in Microsoft Dynamics AX Retail MPOS

Recommendations API is an example built with Microsoft Azure Machine Learning that helps your customer discover items in your catalog. Customer activity in your digital store is used to recommend items and to improve conversion in your digital store. The recommendation engine may be trained by uploading data about past customer activity or by collecting data directly from your digital store. When the customer returns to your store you will be able to feature recommended items from your catalog that may increase your conversion rate. Microsoft Azure Machine Learning’s Recommendations includes Item to Item recommendations: a customer who bought this also bought that and Customer to Item recommendations: a customer like you also bought that. You can find Recommendations Machine Learning model in Microsoft Azure Marketplace: http://datamarket.azure.com/dataset/amla/recommendations

In order to start using Machine Learning model from Microsoft Azure Marketplace you first need to sign up

Sign up

For the sake of this walkthrough I signed up for a free trial

Trial

Once you have signed up you can start using the model. The easiest way to understand what the Recommendations Machine Learning model can do for you is to explore the provided dataset

Explore this dataset

Please note that there’re numerous functions/methods available for interaction with the model. Please consider downloading a sample application for a quick start from here: http://1drv.ms/1xeO2F3

The dataset used is a catalog of books. The idea is simple: we have a list of books in the catalog, and we also have information about what books people have purchased. This is enough to predict, for a person purchasing a book, what other books this person may also be interested in purchasing
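The “a customer who bought this also bought that” idea can be illustrated with simple co-occurrence counting. The sketch below is plain Python and is not the algorithm used by the Recommendations model, which the article does not describe; the customers and their purchases are made up, and “Book C” is a hypothetical title.

```python
from collections import Counter
from itertools import permutations


def item_to_item(purchases):
    """Map each item to a Counter of items bought by the same customers."""
    co_counts = {}
    for basket in purchases.values():
        for a, b in permutations(set(basket), 2):
            co_counts.setdefault(a, Counter())[b] += 1
    return co_counts


def recommend(co_counts, item, top_n=1):
    """Return the items most often bought together with the given item."""
    return [other for other, _ in co_counts.get(item, Counter()).most_common(top_n)]


# Hypothetical usage data: customer -> purchased books.
purchases = {
    "customer1": ["Clara Callan", "Restraint of Beasts"],
    "customer2": ["Clara Callan", "Restraint of Beasts"],
    "customer3": ["Clara Callan", "Book C"],
}
co_counts = item_to_item(purchases)
recommended = recommend(co_counts, "Clara Callan")
print(recommended)
```

A production engine would also need to handle popularity bias and items with no usage history, which this counting approach ignores.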

Catalog of books

Usage patterns

Please note that this is just a sample dataset for illustration purposes and you can use any data you would like for your particular scenarios. You can now use the sample app with your unique credentials to test Recommendations Machine Learning model

After downloading the sample app and substituting email and accountKey with my real values I can see the model in action

Source code

    …
    public static void Main(string[] args)
    {
        /*
        if (args.Length != 2)
        {
            throw new Exception(
                "Invalid usage: expecting 2 parameters (in this order) email and DataMarket primary account key");
        }
        */

        var email = "your@email.com";//args[0];
        var accountKey = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX";//args[1];

        Console.WriteLine("Invoking Azure ML Sample app for user {0}\n", email);
    …

Please note that for simplicity I’m launching the app without arguments and instead provide my credentials explicitly in the code, which is why I commented out a few lines of code

Output

Please note that the app goes through a series of steps to get to the result

- Creating model container
- Importing catalog and usage data
- …
- Getting some recommendations

Please note that in production your model (model container) will already be created in the cloud and pre-trained on the data, so you will get to Recommendations right away

As a result, when purchasing one book (“Clara Callan”) I’ll be advised to look at another one (“Restraint of Beasts”) as shown above. Here are the IDs which were used during this experiment

Model: “96d0410a-8926-4b33-b354-fb5b8e86cd56” (“Recommendations”)

Item: “2406e770-769c-4189-89de-1c9283f93a96” (“Clara Callan”)

Now I can get the same result by using user interface on the web page

Explore this dataset (experiment)

Please note that I selected “ItemRecommend” function/method to be invoked and specified appropriate parameters for modelId and itemId(s). As expected the result will be exactly what I got from executing sample app earlier

Result

Machine Learning algorithm schema for Recommendations engine remains the same. First we train the model by feeding it with data (books catalog and usage), then we come up with predictions/recommendations as we score the model. Finally, you may also validate the accuracy of predictions/recommendations by comparing them to what people ultimately purchased

Machine Learning algorithm schema

A great practical application of the Microsoft Azure Machine Learning Recommendation engine is its use as a part of Microsoft Dynamics AX Retail POS

Retail MPOS (Modern POS)

Please note that in the screenshot above Related products are predefined static products associated with a certain product in the setup, while Recommended products are recommended dynamically by the Microsoft Azure Machine Learning Recommendation engine based on the history of customer purchases

Summary: In this article we learned about how Machine Learning algorithms apply in the context of real world business scenarios for Microsoft Dynamics, specifically, we considered Manufacturing Demand Forecasting and Retail Product Recommendations scenarios. After reading this article you should be able to implement your own scenarios using Microsoft Azure Machine Learning

Tags: Microsoft Azure, Machine Learning, Microsoft Dynamics AX, Manufacturing, Demand Forecasting, Retail, Product Recommendations, POS, Supervised Learning, Unsupervised Learning, Regression, Classification, Clustering, Anomaly detection, Web Services, Train model, Score model, Evaluate model.

Note: This document is intended for information purposes only, presented as is with no warranties from the author. This document may be updated with more content to better outline the issues and describe the solutions.

Author: Alex Anikiev, PhD

Great tutorial to get one started with Azure ML!

Thank you for this Alex. As a noob to the field of machine learning this was very helpful.

However, I'm still a noob. Not proficient enough to create complex models yet. So I have a challenge for you. Can you apply concepts similar to your exercises in this article to a common manufacturing problem associated with production cycle times in AX2012?

As with most ERP systems, we know that cycle times entered in production routes for standard manufacturing, or with production flows in lean manufacturing, are predicated on best guesses. Once we start to accumulate actual production times, we have data which could help us to refine those guesses. But this is not something that AX provides in a simple and transparent manner. I would think that machine learning could readily address this - to both differentiate cycle times by resource/operation that are within an acceptable range from those that may need attention and to make suggestions when a delta between the guess and the actual falls outside of an acceptable range.

What do you think, worth some of your time?